[I’ve published this now, but may continue to update it]

One of the many frustrations of using the current crop of social networking platforms is that they are each controlled by a single large company or organization that makes all the decisions for us, usually giving us little choice.

I am writing this particular article out of frustration with the moderation (or censorship) approaches of large social media organizations such as Meta (Instagram, Facebook, etc.), Google (particularly Drive and YouTube) and X (formerly known as Twitter). In the case of Meta and Google, they each clearly want to set themselves up as the arbiter of what is “right” and “wrong” for the whole world, and the user must either accept that or not use the service.

In the case of X, there is almost complete freedom over what may be posted, but there is very little ability for someone to control what is shown to them or to people they want to protect such as children.

The Naturist Perspective

I am looking at this issue from a naturist perspective because that context illustrates the problems very well, and also because, as a naturist myself, I have seen how problematic it can be on those other platforms. Adopting this perspective, which primarily is concerned with how “nudity” is managed, limits the discussion somewhat, as I will not be considering other examples such as malicious communications, spam, violence, etc., but others can look at those aspects if they want.

To a naturist, nudity is a completely matter-of-fact part of everyday life; it is a healthy and wholesome state that has no particular connection with sexual activity. The human body is not considered to be “disgusting” – rather it is celebrated in all of its diversity – and consequently the whole issue of body shame all but disappears.

There are many people who don’t subscribe to that view, and that is entirely for them to decide for themselves: if they want to wear clothes all the time, then that is their choice. However, they do not (or should not) have the right to impose that choice on other people. I know people who are absolutely terrified by the sight of a spider, but that does not mean spiders, or images of spiders, should be eliminated.

If someone is offended by the sight of a human body, then they can look away. Moderation in Bluesky (or rather, the AT protocol – see below) is primarily about providing people with the means to “look away”, to be left alone, or to protect the people they have a responsibility to protect, such as children.

As an aside, it is worth noting that this choice also brings a danger of exacerbating the “echo chamber” effect, making it harder to achieve normalization of nudity: people may automatically block things they don’t understand, and so never experience them or come to understand them. However, that is a problem in the real world as well.

Moderation: who decides?

The stated objective of Bluesky is to create “a social app that is designed to not be controlled by a single company”, and the whole architecture of the AT protocol (Authenticated Transfer Protocol) on which Bluesky is built is designed with that in mind.

That principle applies to the issue of moderation just as much as it does to everything else and, in order to achieve that, moderation is designed as a separate service. Anyone can set up their own moderation service and users (or their apps) can decide whether or not to subscribe to that particular moderation service. This has the potential, in principle, to achieve the level of choice that I referred to in the last section.

It is particularly good that the user is able to decide how labelled content is handled, or even whether to ignore a label completely, within the limits set by the app they use. If the user finds a particular app too restrictive, they have the option to use a different app instead, though right now that is Hobson’s Choice, as Bluesky is currently the only app running on the AT protocol platform.

A full description of the approach may be found in the blog article: Bluesky’s Moderation Architecture. Note that, strictly, that article should be titled “AT Protocol’s Moderation Architecture” because, even though AT is designed by the Bluesky organization, it is not specific to the Bluesky App itself – other apps are, or can/will be, available.

I do think the Moderation Architecture is very well-designed and really has the potential to provide the choice that is needed, but only as long as people can be bothered to use it properly. (More about that important last point later.)

Labels

A moderation service (or “labeller”) in AT operates by creating various labels that may be applied to content (and to content providers). The nature of those labels is (mostly) not defined by the AT specification, as that is the role of the labeller, but there are various actions that may be associated with a label, such as hiding or blurring the content of a post or its attached images/videos, showing a warning, or simply ignoring the label.

The basic idea is that there are repositories that hold the posts that people make when using Bluesky (or other AT apps in the future), there are apps that people use to get hold of those posts and present them to the user, and the apps use the labels provided by whatever labelling services have been selected by the user, to decide how or whether to present those posts to that user.
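To make that flow concrete, here is a minimal sketch in Python. It is only an illustration of the idea, not the real AT protocol API: the field names `src`, `uri` and `val` are loosely modelled on the label specification, and the labeller identifiers, post addresses and the function `labels_seen_by_user` are all invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Label:
    src: str  # who issued the label (a labelling service); hypothetical identifiers below
    uri: str  # what the label refers to (a post, in this sketch)
    val: str  # the label value itself, e.g. "nudity"


def labels_seen_by_user(post_uri, published, subscribed):
    """Return the label values on one post, counting only labellers the user subscribes to."""
    return sorted(
        {label.val for label in published
         if label.uri == post_uri and label.src in subscribed}
    )


# Labels published by two (made-up) labelling services.
published = [
    Label("did:example:labeller-a", "at://example/post/1", "nudity"),
    Label("did:example:labeller-b", "at://example/post/1", "porn"),
    Label("did:example:labeller-b", "at://example/post/2", "graphic-media"),
]

# A user who subscribes only to labeller A never sees labeller B's opinion.
print(labels_seen_by_user("at://example/post/1", published, {"did:example:labeller-a"}))
```

The point of the sketch is that the same post can carry different labels from different services, and the user's choice of labellers determines which of them the app even takes into account.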

Labellers can decide how they will determine what labels to apply: for example by receiving reports from users of their labelling service, or by using automated analysis (which has been shown by Meta and Google, as well as Bluesky itself, to be very unreliable at the moment anyway). They can also provide an appeals procedure for labels to be challenged, and the Bluesky moderation service itself does this through the Bluesky app.

There is a technical specification for labels, which defines how labels should be represented but doesn’t define what labels should exist – that is for labelling services to decide. There is also a guide called Labels and moderation, which discusses a mixture of how labels work, examples of labels, and how Bluesky has implemented moderation in its own app.

The nature of labels

The technical labels specification, for good reason, does not define what kinds of labels exist (though there are some exceptions to this – called Global label values) – a label is a label; it says something about the content to which it refers, and that is it. This is a good principle, but it means that there is scope for confusion when it comes to using the platform. This is apparent in the Labels and moderation document.

A label could be expressing, for example:

- some factual assertion about a post, such as whether an erect penis is clearly visible in the image (though even that could be a matter of opinion in some cases – e.g. how many pixels constitute a penis?), or

- some assertion about a post that is actually just a matter of opinion, such as “adultOnly”, or

- some action that should be taken by the app regarding a post, such as “hide” or “warn”.

The fact that this is not clearly defined can be confusing, and the guide actually makes statements that suggest such confusion, such as stating that a label value porn “puts a warning on images and can only be clicked through if the user is 18+ and has enabled adult content”. In fact, a label doesn’t do anything of the sort – it is just a label: the app decides what action to take depending on how it interprets that label and what settings the user has chosen.
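The separation between a label and the action taken on it can be sketched in a few lines of Python. This is an assumption-laden illustration rather than how the Bluesky app is actually written: the `Action` values, the `decide_action` function and the two settings dictionaries are all hypothetical.

```python
from enum import Enum


class Action(Enum):
    SHOW = "show"
    WARN = "warn"
    HIDE = "hide"


def decide_action(label_value, user_settings, app_default=Action.WARN):
    """The app picks the action: use the user's setting for this label,
    or fall back on the app's own default if the user has not set one."""
    return user_settings.get(label_value, app_default)


# Two hypothetical users with different settings for the same label value.
relaxed = {"porn": Action.SHOW, "nudity": Action.SHOW}
cautious = {"porn": Action.HIDE}

for name, settings in (("relaxed", relaxed), ("cautious", cautious)):
    print(name, decide_action("porn", settings).value)
```

The label value `porn` is identical in both cases; only the settings differ, and so does the outcome. A different app could choose a different default, or a different set of actions altogether.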

This may just be sloppy writing, or it may be a failure to distinguish between the principles of the AT protocol and the decisions that have been made specifically for the Bluesky (or any other) app. Either way, it suggests muddled thinking on the part of the writer and is certainly very confusing to a reader.

Self-labelling and global label values

It is possible for a poster to apply a label to a post at the time they make it, called self-labelling. However, self-labelling can only make use of the “global values” defined by the protocol itself, namely: !hide, !warn, !no-unauthenticated, porn, sexual, nudity, and graphic-media. It is worth looking into these in more detail as they are currently the primary means used to moderate content in practice.

The first three of these define actions, such as to deny access to people who are not logged in. They don’t specify any reason for the action, and cannot be overridden. I think there is a serious problem with these as they provide a lazy way for service providers to control what people can and can’t see, without any need to justify their decisions.

The rest are descriptive, the last one being described as relating to violence or gore. For the purpose of the following discussion, I will concentrate on the other three: porn, sexual, and nudity, which are assertions about a post’s content or its attachments. These are all subjective and could all be described as a matter of opinion.
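The split between the two kinds of global value can be sketched as follows. The list of values is the one given above; treating the leading `!` as the marker of an action value is simply my reading of that list, and the helper names `is_action_value` and `check_self_labels`, as well as the rejected value `non-sexual-nudity`, are made up for the example.

```python
# The global label values listed above.
GLOBAL_LABEL_VALUES = (
    "!hide", "!warn", "!no-unauthenticated",
    "porn", "sexual", "nudity", "graphic-media",
)


def is_action_value(value):
    """Assumption: values starting with "!" prescribe an action rather than describe content."""
    return value.startswith("!")


def check_self_labels(values):
    """Accept a self-label only if it is one of the global values; otherwise raise ValueError."""
    unknown = [v for v in values if v not in GLOBAL_LABEL_VALUES]
    if unknown:
        raise ValueError(f"not a global label value: {unknown}")
    return list(values)


action_values = [v for v in GLOBAL_LABEL_VALUES if is_action_value(v)]
descriptive_values = [v for v in GLOBAL_LABEL_VALUES if not is_action_value(v)]

print("actions:", action_values)
print("descriptions:", descriptive_values)
print(check_self_labels(["nudity"]))
```

Under this sketch, a poster who wanted to self-label with something more precise, such as a hypothetical `non-sexual-nudity` value, would be refused, which is why the meaning of the few descriptive values matters so much.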

“porn” is really not properly defined, and will always be subjective. To a foot fetishist, pictures of naked feet are pornographic as they can “cause sexual excitement”; whether that is the intention of a post cannot easily be determined (and is probably irrelevant).

“sexual” is again a matter of opinion, though it ought to be limited to text or images that refer to or show actual sexual acts or people who are clearly sexually aroused, as opposed to simply nudity, which has its own label. That distinction is good, provided it is used properly.

“nudity” may seem clear-cut to some people, but even that is relative to the context of the reader. In the majority of western culture, it seems to be defined by whether the genitals, anus, female nipples, and/or possibly the buttocks are visible in an image or video. However, in Islamic culture, it could refer to a female showing any part of the body between the ankles, the wrists and the neck.

This is the problem with moderation: everything you try to pin down is ultimately determined relative to the viewer of the content, rather than to the producer of it.

Labelling in use

Here is an example of the way it works in practice and some problems.

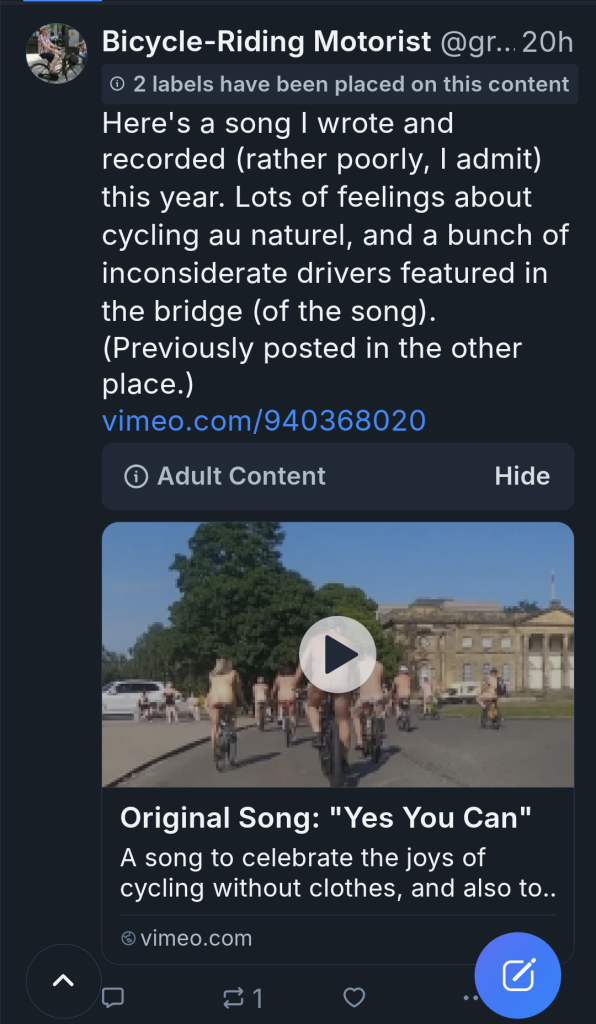

I posted a link to a video on Vimeo of a song that I wrote and recorded about nude cycling:

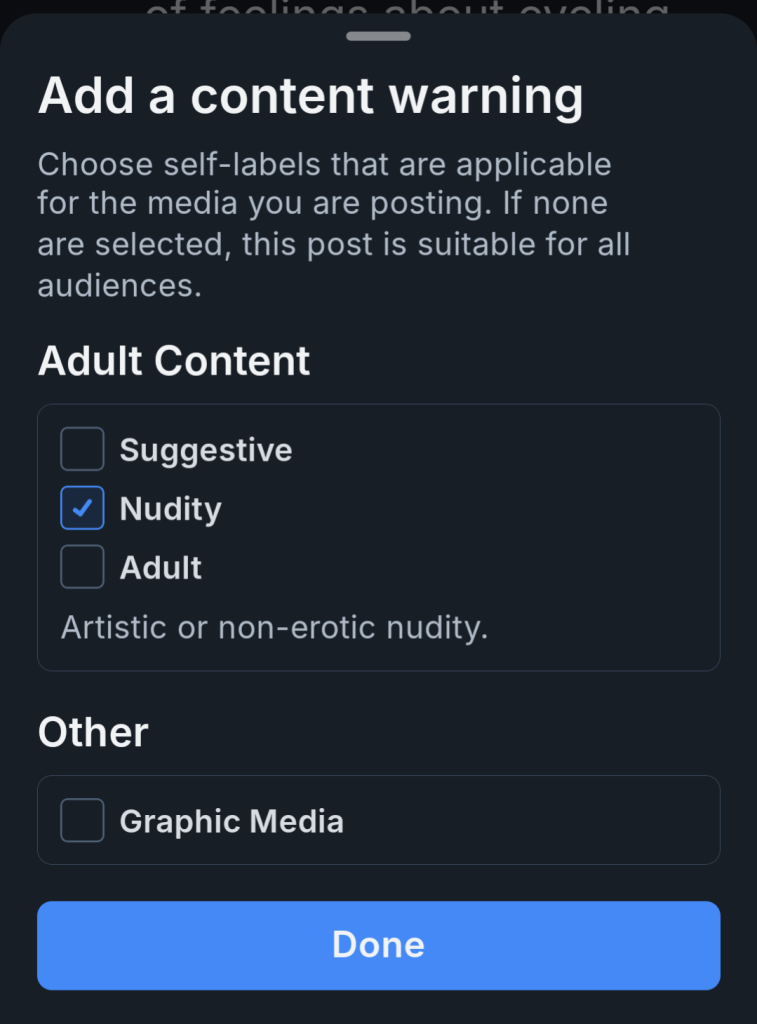

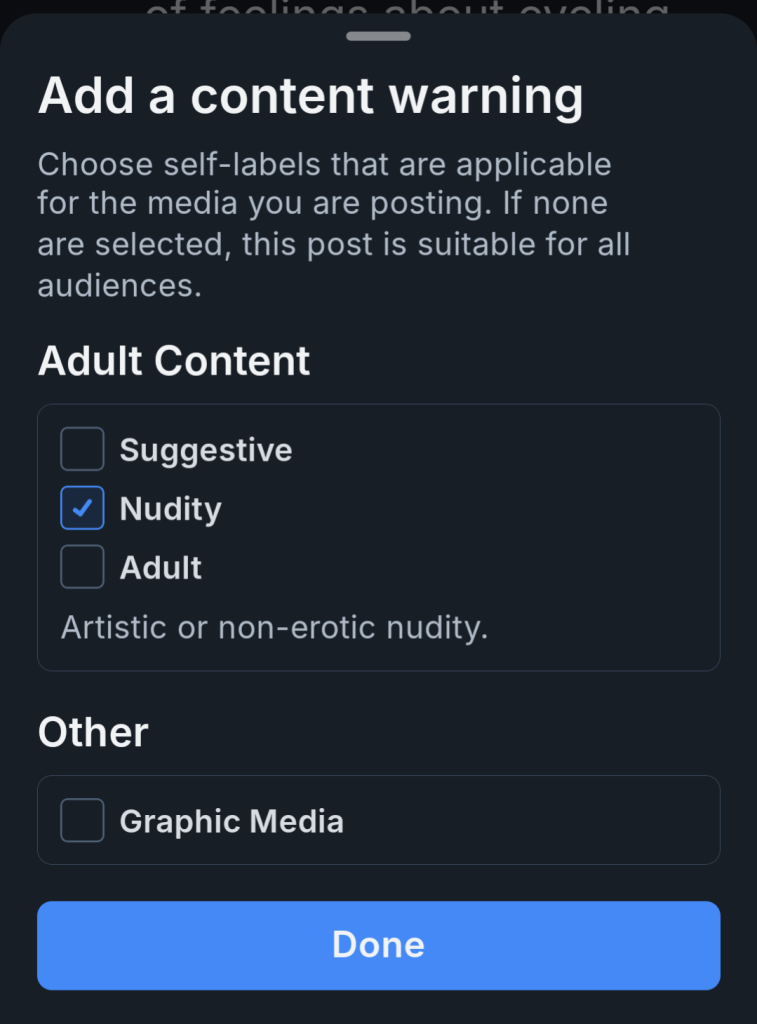

The video contains images of people cycling without clothes, as does the preview image (although that has already been blurred), so I self-labelled it as Nudity:

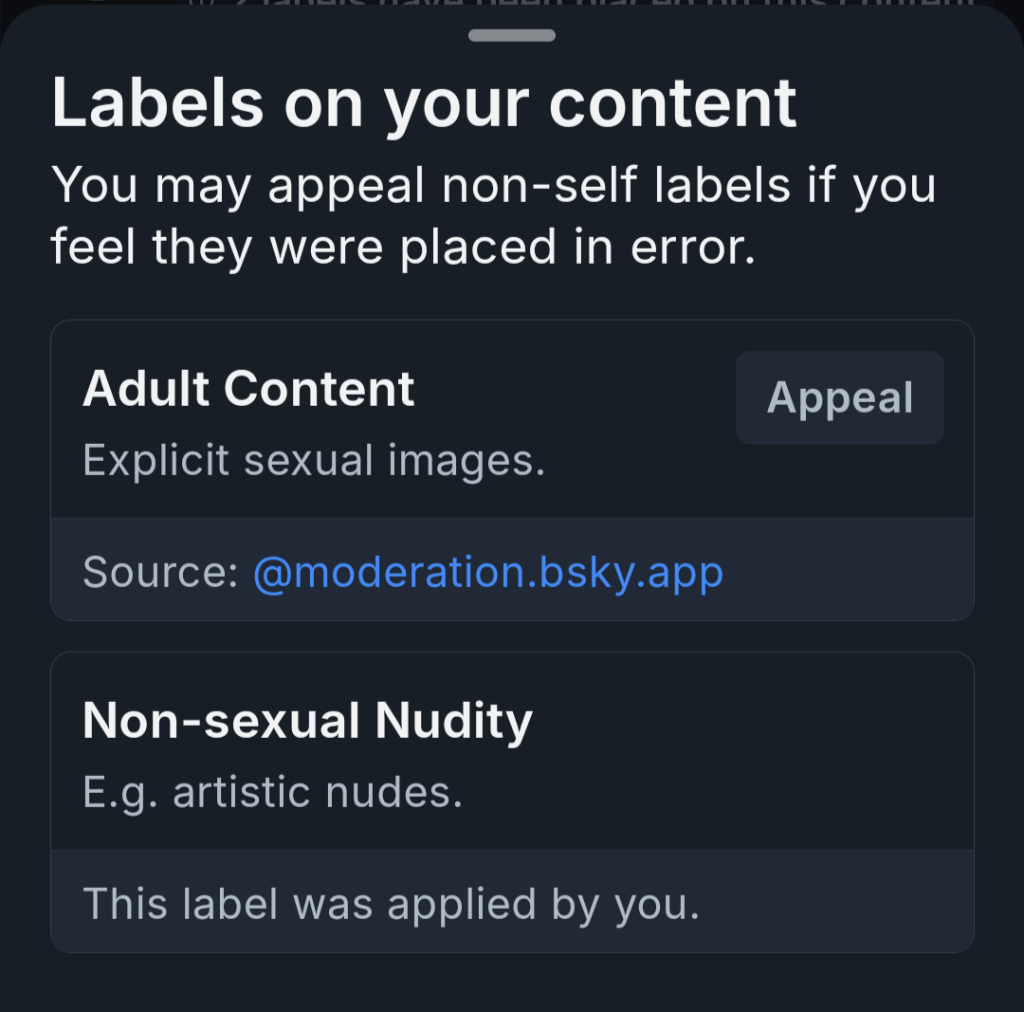

Immediately after the upload completed, the Bluesky moderation service added another label, flagged in the app screen as “Adult content” which, on closer inspection, means “Explicit sexual images”. This is what you get when you click on the text about labels at the top of the post:

However, I then appealed, stating that there is no sexual activity in the preview image, which is blurred anyway, or in the linked video, as cycling is not a sexual activity. A couple of days later, they had removed their label and just left my self-label on. There was no notification of the decision, but it’s good that the appeal system appears to work.

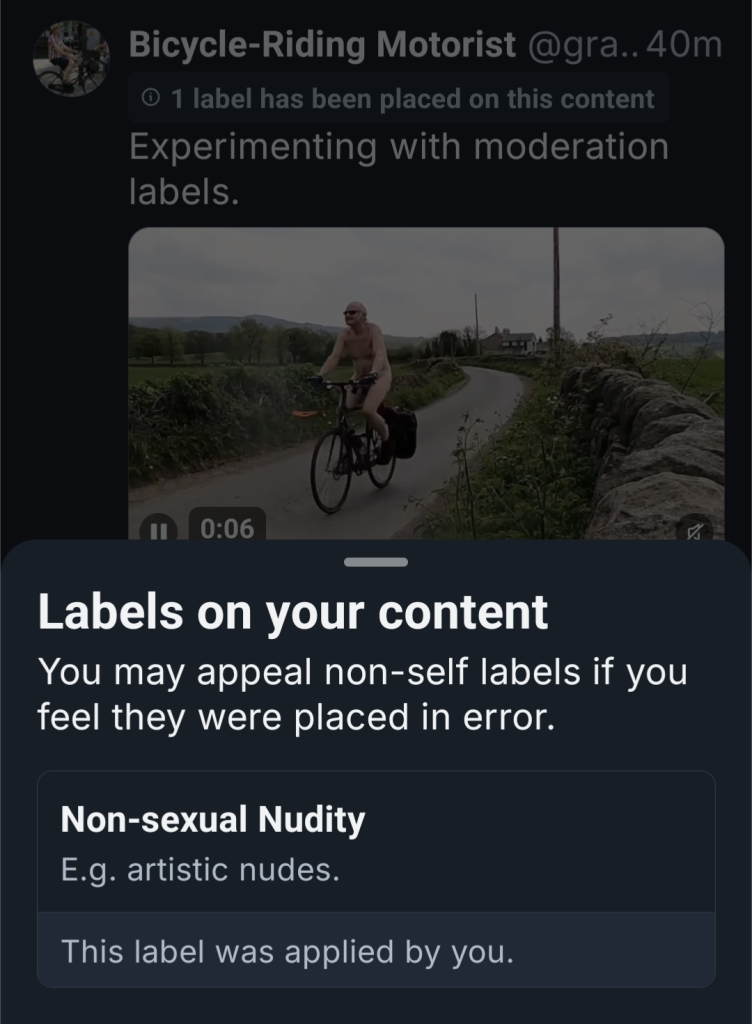

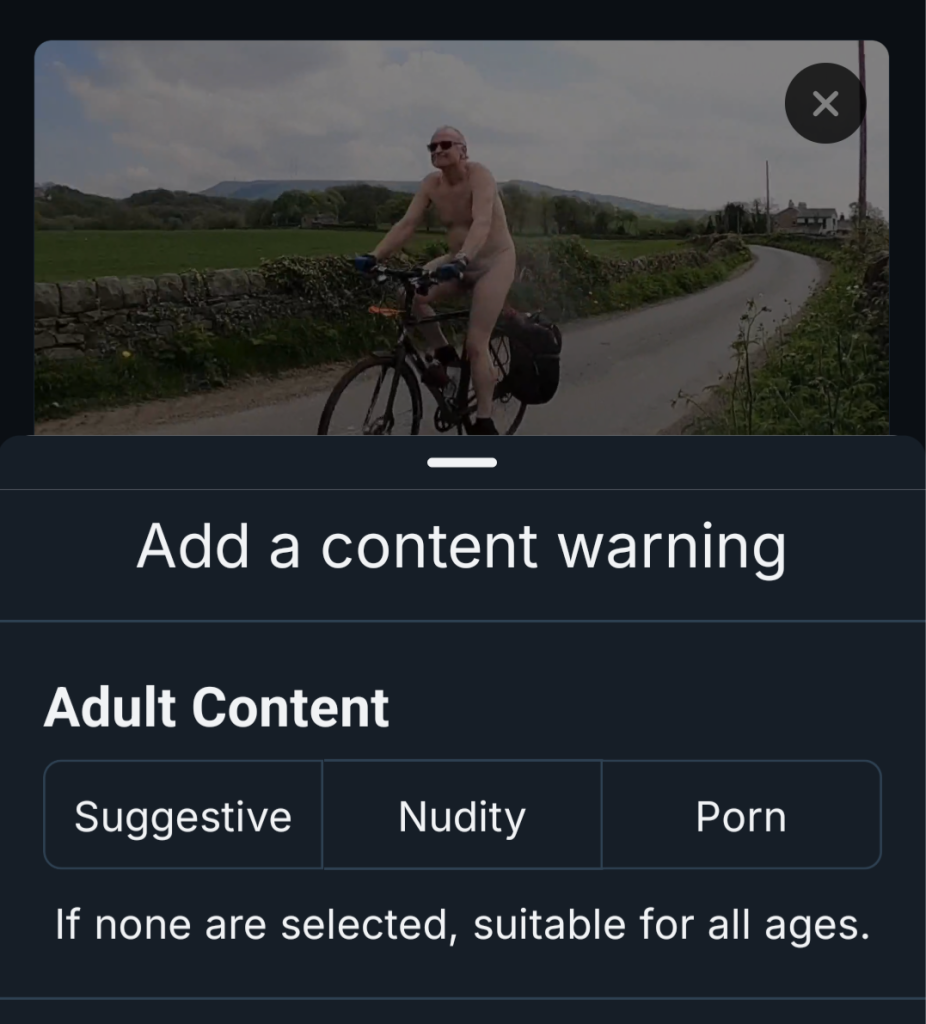

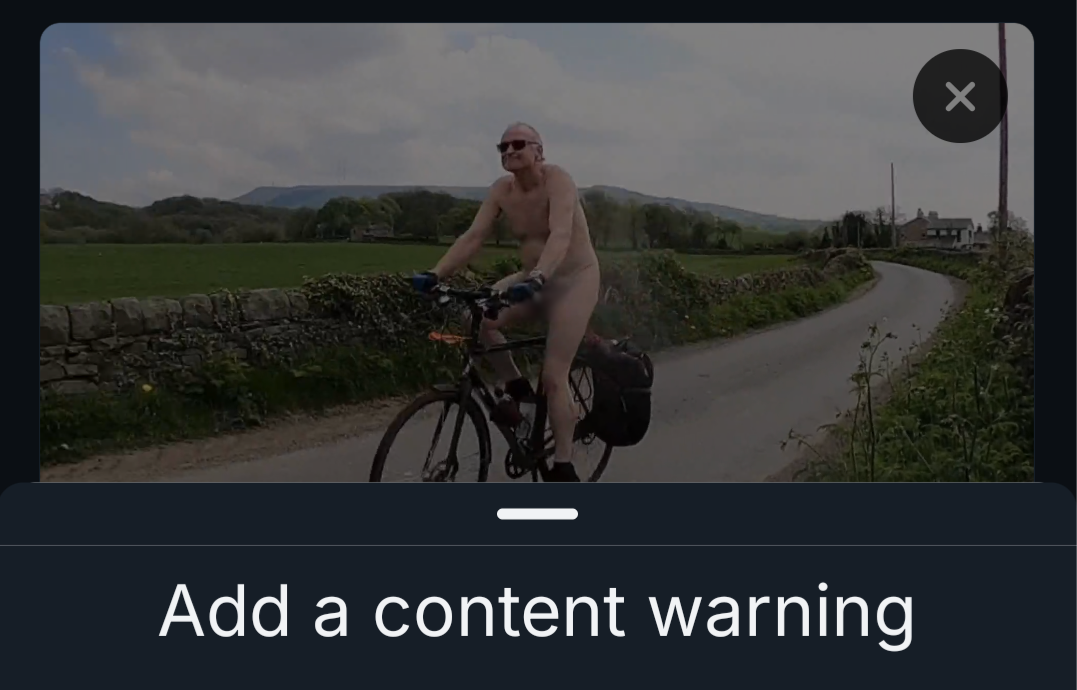

In another example, I included directly in a post a video of myself cycling past the camera without clothes on and self-labelled it as Non-sexual Nudity:

19 days later, this post still has only my self-label on it, so that is good also.

I note that other people have been experimenting to see what happens regarding moderation. For example, a user called Jess posted this in relation to her own images.

I looked at the ones that had been rejected on appeal, and there is nothing I can see to differentiate them from the ones that were accepted. They are all beautiful pictures that simply happen to have non-sexual nude bodies in them. Whilst it’s good to see that most of those appeals were successful, I guess that, when it comes down to it, the outcome always depends on the prejudices of the person making the appeal decision.

Language matters

It is annoying that, in the Bluesky app’s dialogue box for setting self-labels, the Nudity label option is placed under a heading Adult content: that is a matter of opinion.

There is also much inconsistency in the language used to define things. For example, the option Adult appears under the title Adult content, which is weird, and is defined as “Sexual activity or erotic nudity”, and the option Suggestive is defined as “Pictures meant for adults”. It seems that whoever wrote this doesn’t understand what they are trying to do.

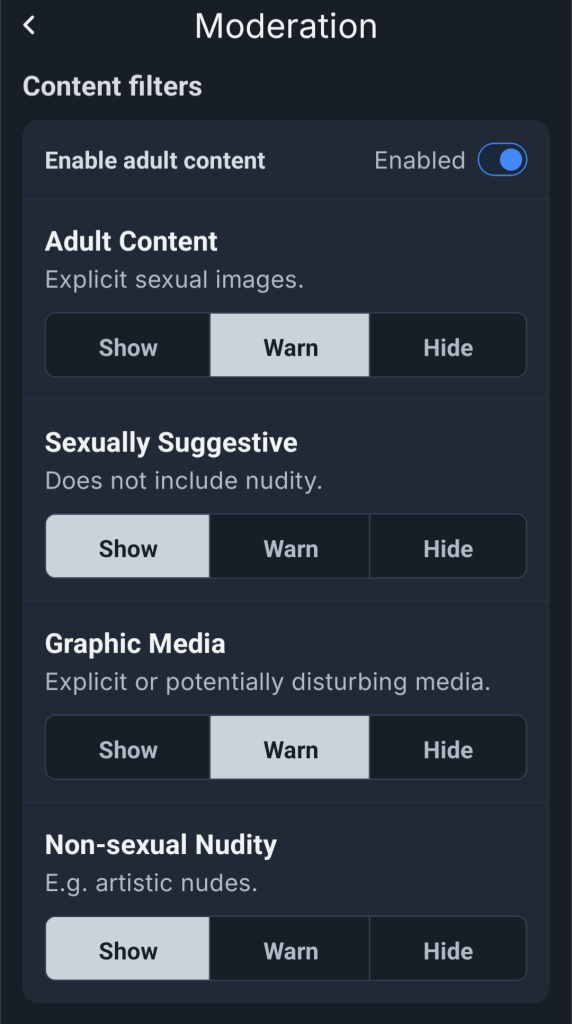

I find it annoying how inconsistent the terminology is in the app on all this. Here is the screen for selecting moderation options for content received:

Yet, in the screen for setting a self-label on an image or video, the following options are offered:

The terminology is totally inconsistent between these two dialogue boxes, and the latter is not consistent with the dialogue for self-labelling (text) content shown above.

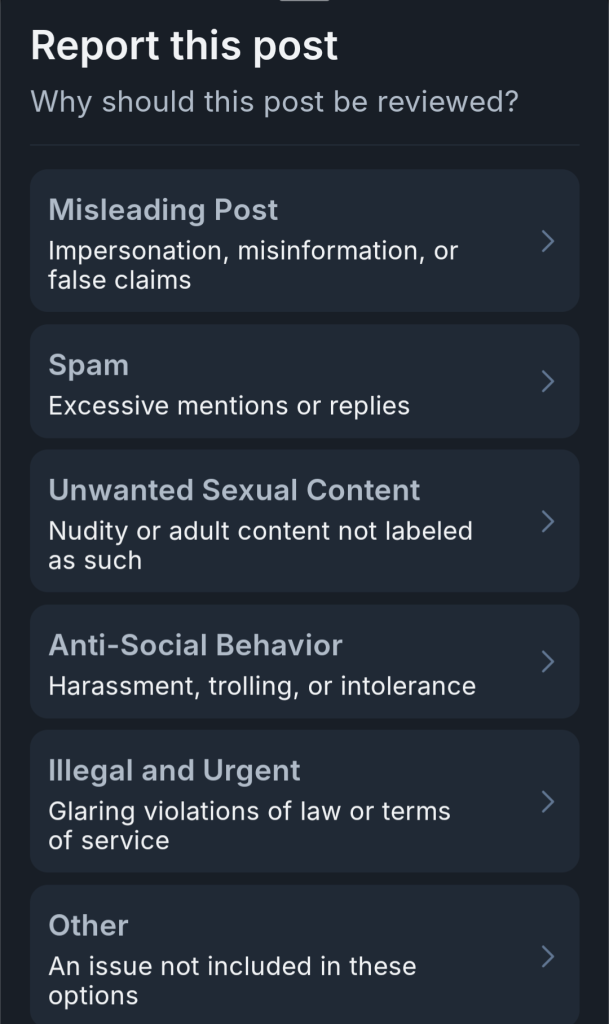

The dialogues for users to report content to the moderators are equally irrational:

So, in this case, all nudity is considered to be unwanted sexual content, and the only adult content is sexual. (Violent content doesn’t even get a look-in unless you create your own category.)

This degree of variation in the terminology only serves to cause more confusion, and completely destroys the good intention in the labelling scheme to distinguish between sexual content and non-sexual nudity.

So why bother about it?

It might be said “why not just set your app to allow all content so that it behaves like X?”. Well, this is mostly what I do, but there is still a need to have moderation. For example, somebody might want to protect children from pornographic content or content of a sexual nature whilst still allowing them to have access otherwise. In a family naturist setting, there could well be a desire to do this whilst not giving them the impression that there is something wrong with the human body by blocking all nudity. It is, therefore, important to make a clear distinction between these things.
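As a rough illustration of that family naturist setting, the sketch below hides anything labelled `porn` or `sexual` while showing `nudity` without any warning, and applies the most restrictive action when a post carries several labels. The profile, the three action names and the `resolve` function are hypothetical; they are not taken from the Bluesky app.

```python
# Order of restrictiveness for the three actions used in this sketch.
SEVERITY = {"show": 0, "warn": 1, "hide": 2}

# Hypothetical profile for a family naturist household.
FAMILY_NATURIST_PROFILE = {
    "porn": "hide",
    "sexual": "hide",
    "nudity": "show",
}


def resolve(labels, profile, default="show"):
    """Return the most restrictive action that the profile assigns to any label on the post."""
    actions = [profile.get(label, default) for label in labels]
    return max(actions, key=SEVERITY.__getitem__, default=default)


print(resolve(["nudity"], FAMILY_NATURIST_PROFILE))          # correctly labelled naturist post
print(resolve(["nudity", "porn"], FAMILY_NATURIST_PROFILE))  # same post with a wrongly added label
```

The second call shows why accurate labelling matters: a profile like this can only do its job if non-sexual nudity is not also given a sexual label, because one wrongly applied label is enough to hide the post.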

Currently, it is annoying that most submissions featuring non-sexual nudity are labelled incorrectly. For example, how on earth does this post from the aforementioned Jess constitute Explicit sexual images:

Yes, it’s possible to appeal, but that is extra work. Looking at other naturist accounts on there, quite a few naturists appear to have come to Bluesky, made a few posts, and then stopped using it.

In conclusion, I do think that the approach adopted by Bluesky, with the AT Protocol and its moderation architecture, has the potential to provide the degree of choice that we want. However, that relies on the architecture being understood and adopted properly across all users rather than just falling back on the global label values. It will also depend on the creation of alternative apps to the Bluesky one that build on the AT Protocol; this is particularly important in relation to the “Global label values”. I’m not convinced that people can be bothered to do that, though.

As a first step, I think it is important that every naturist user immediately appeals every inappropriate “Explicit sexual images” label that is applied to their posts. If this inappropriate labelling goes unchallenged, then it is likely to become an accepted norm.

One reply on “Thoughts on Bluesky’s Moderation”

Well written & thought through, thank you.